The paradox of boundless intelligence and the necessity of human limits.

This super-interesting Psychology Today article examines the fundamental differences between human and artificial intelligence (HI and AI). The author (John Nosta) argues that human cognition is uniquely defined by constraints, such as information scarcity and the weight of consequences, which force us to think deeply and take ownership of our decisions. In contrast, AI operates without any such friction, producing fast and fluent results that lack the effort, and thus the accountability, inherent to the human experience. While technology can process data with superior speed, it remains “weightless” (I guess that is another word for meaningless) because it does not have to live with the outcomes of its choices. Ultimately, the article suggests that true intelligence is not just about computational power but about the risks and responsibilities that shape human understanding.

Some quotes I like most:

The most important change introduced by advanced AI may not be how intelligent our systems become, but the conditions under which intelligence now operates. Here's my thesis: Across human history, thinking was shaped by limits. Information was incomplete, and mistakes carried real consequences. These were not inconveniences to be engineered away. They were the pressures that formed judgment.

How Judgment Was Formed

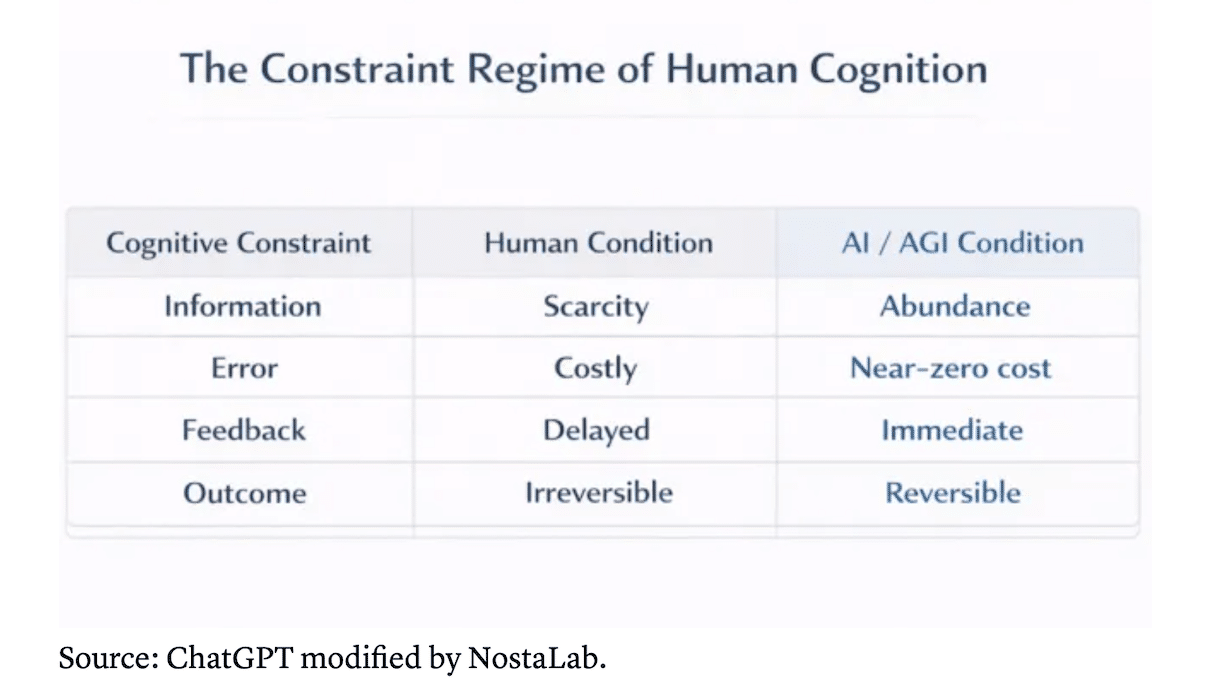

Human cognition didn't evolve as some sort of frictionless optimization engine. Cognition found itself in a world that demanded care and attention. When information was scarce, attention mattered. We learned to notice and infer because we had no choice. When mistakes were costly, our judgment slowed because a wrong decision could have significant consequences or even impact survival itself. When feedback was delayed, reflection and analysis became essential. And when outcomes were irreversible, this curiously human thing called responsibility followed. It's important to recognize that these limits did not hinder intelligence; they shaped it. This “training” didn't occur randomly. Human cognition emerged within a specific constraint regime, one that was shaped over time. Stripped to its essentials, those constraints look like this:

“Scarcity sharpened attention, cost made accuracy matter, delay required reflection, and irreversibility imposed ownership. Over time, judgment emerged as an adaptation to consequence.”

Intelligence Without Pressure

AI operates under the inverse of this construct. Information is abundant, errors are cheap, feedback is immediate, and outputs can be revised endlessly. And this is the structural consequence of high-velocity computation. AI does not simply think faster; it thinks without exposure to consequence. When these pressures disappear at once, intelligence does not vanish, but its behavior changes. Structure arrives fully formed, and decisions no longer carry the same internal weight because nothing truly sticks. This reversal—what might be called anti-intelligence—is subtle but important. Intelligence no longer earns confidence by wrestling with uncertainty. Confidence emerges instead from coherence and speed.

The Problem of Weightlessness

The concern here is not that AI will be wrong. It is that its outputs will feel authoritative without having been earned. Fluent answers arrive polished, and that fluency reads (misreads) as confidence. Yet this confidence isn't the product of the survival of error but a by-product of statistical completion. When conclusions arrive without struggle, they can be accepted without ownership. Simply put, if they fail, nothing breaks.

Source: John Nosta